Biases in Apple's Image Playground

Apple recently released an image generation app named Image Playground. The app lets you select a photo and add a description, and it generates an image combining the two. The app is generally 'incredibly safe', meaning that the descriptions (prompts) you add do not change the resulting image much. Apple adds a few safety features:

- Image Playground only supports cartoon and illustration styles that eliminate the risk of creating authentic-looking deepfakes, making it less vulnerable to being misused.

- The images are also largely limited to people's faces. The narrower frame means fewer chances to produce images that reflect bias or that can raise safety concerns.

In addition, Apple only allows a subset of prompts and tells you when it cannot use one, for example because it contains negative words or the names of celebrities. As a result, the app feels pointless unless you're looking for generic, fairly similar-looking cartoon images of your loved ones.

While playing around with Playground, I quickly realised that the results for a particular photo of me were pretty strange.

This input:

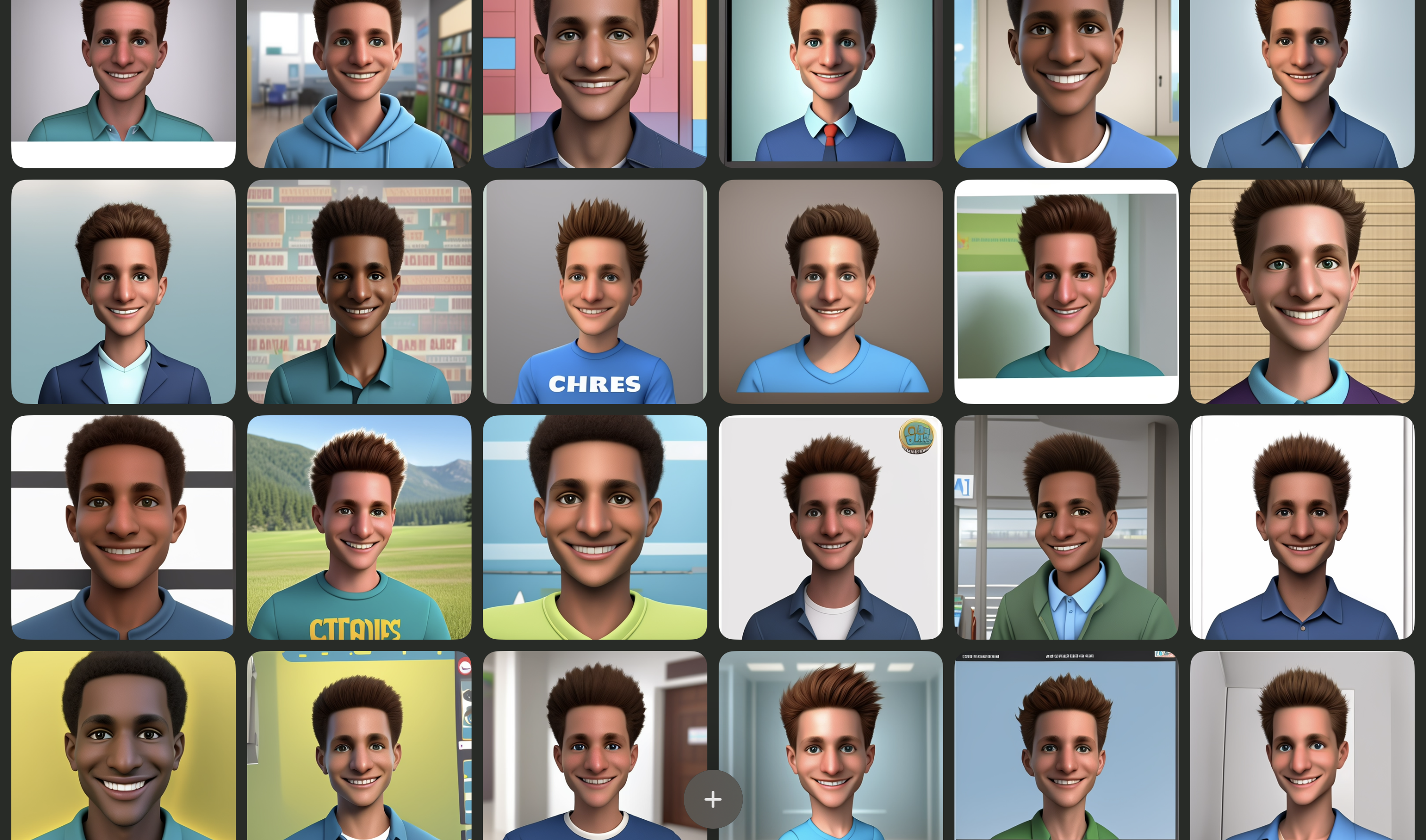

leads to the following 24 images of 'me':

In some images, 'my' skin tone gets significantly darker and my hair changes quite a bit, as if Image Playground doesn't know what facial features are present in the image.

Although Image Playground is heavily restricted and we do not have direct access to the underlying model, can we still use the prompting interface with the above image input to influence the skin tone of the resulting images? It turns out we can, and in precisely the biased way most image models behave 🤦‍♂️.

Adjectives

Prompt: affluent, rich, successful, prosperous, opulent

Prompt: poor, impoverished, needy, indigent, penniless, destitute, disadvantaged

Sports

Prompt: skiing

Prompt: basketball

Jobs

Prompt: farmer

Prompt: investment banker

Music

Prompt: rap

Prompt: classical music

Dance

Prompt: ballet

Prompt: break dance

Clothing style

Prompt: street wear

Prompt: suit

🤦🏼‍♂️🤦🏿‍♂️🤦🏼‍♂️🤦🏿‍♂️

No surprise

I could not replicate the above results with different photos, although I imagine that this will be possible with more effort. The above results fit a more general trend, highlighting that image generation models exhibit significant biases. Most relevant to the above is the paper Easily Accessible Text-to-Image Generation Amplifies Demographic Stereotypes at Large Scale, which shows that a broad range of ordinary prompts produce stereotypes.

> For example, we find cases of prompting for basic traits or social roles resulting in images reinforcing whiteness as ideal, prompting for occupations resulting in amplification of racial and gender disparities, and prompting for objects resulting in reification of American norms. Stereotypes are present regardless of whether prompts explicitly mention identity and demographic language or avoid such language.

The good news is that Biased Algorithms Are Easier to Fix Than Biased People. And at least some people at Apple are looking at measuring bias, given this NeurIPS '24 paper, "Evaluating Gender Bias Transfer between Pre-trained and Prompt-Adapted Language Models".

Further reading

The list below is primarily based on Pietro Perona's excellent lecture at ICVVS 2024 (similar slides here):

- Hausladen, Carina I., Manuel Knott, Colin F. Camerer, and Pietro Perona. "Social perception of faces in a vision-language model." arXiv preprint arXiv:2408.14435 (2024).

- Google apologizes for ‘missing the mark’ after Gemini generated racially diverse Nazis

- Liang, Hao, Pietro Perona, and Guha Balakrishnan. "Benchmarking algorithmic bias in face recognition: An experimental approach using synthetic faces and human evaluation." In Proceedings of the IEEE/CVF International Conference on Computer Vision, pp. 4977-4987. 2023.

- Lim, Serene, and María Pérez-Ortiz. "The African Woman is Rhythmic and Soulful: An Investigation of Implicit Biases in LLM Open-ended Text Generation." arXiv preprint arXiv:2407.01270 (2024).